Transform Any Website into LLM Insights Instantly

I can turn any website into LLM insights quickly, and that matters for keeping AI knowledge current. A fast crawling tool converts a website into data that large language models can use directly, which makes it easy to put those insights to work.

Being able to turn any webpage or PDF into usable input is a big advantage. It lets me feed new information to an LLM as soon as it appears, so my understanding of different topics, and the model's answers about them, stay up to date.

Introduction to LLM Insights

Large language models can change how we handle and create information, but they need good data to work well. That's why being able to turn any website into LLM insights quickly matters.

Key Takeaways

- Transform any website into LLM insights instantly with a fast crawling tool

- Convert websites into LLM-ready markdown for easy integration

- Use retrieval-augmented generation (RAG) to ground LLM answers in external information

- Keep AI knowledge up to date with the latest information

- Turn any webpage or PDF into actionable insights

Understanding the Current Limitations of Large Language Models

Large Language Models (LLMs) have changed natural language processing, yet they face real challenges. A major one is knowledge gaps: a model only knows what was in its training data, so even after training on huge corpora it can give wrong or outdated answers, especially in fast-changing fields.

These limitations can hurt business applications that must be accurate and reliable. An LLM used for customer support, for example, may give wrong answers and frustrate users. LLMs can also be slow and expensive to run, which rules them out for many use cases.

Some big problems with LLMs include:

- Latency issues, with some responses taking 10 to 20 seconds

- High computational costs, with dedicated GPUs costing as much as $20,000 per month

- Limited general knowledge, particularly in newer frameworks and domains

To make the most of LLMs in business applications, we need to tackle these knowledge gaps and limitations. Once we understand them, we can build systems around LLMs that deliver accurate, reliable information, support better decisions, and lead to better results.

Why Traditional Web Scraping Falls Short

Traditional web scraping, extracting data from websites with scripts, has been around for years, but it has serious drawbacks. It is time-consuming, and the raw HTML it returns needs manual cleanup before it is usable, which becomes a major burden with large amounts of data.

Scraping also affects website owners. When content is consumed through a scraper instead of on the site itself, click-through rates drop, and so does ad revenue, which many online businesses depend on. The scale of scraped text is large: ChatGPT, for example, was trained on about 570GB of data from books, web texts, and articles.

Some main issues with traditional web scraping are:

- It's hard to get the right data.

- It takes a lot of time and effort.

- It can hurt a website's ad income.

In short, traditional web scraping alone is not enough. We need better ways to get data to LLMs, and retrieval-augmented generation is one approach that helps.

Retrieval-Augmented Generation: The Bridge to Better AI Responses

Retrieval-Augmented Generation (RAG) is key to making AI responses more accurate and helpful. It retrieves relevant documents from an external source at query time and adds them to the prompt of a Large Language Model (LLM), so the answer can draw on information the model was never trained on. This helps overcome the limits of older, retrieval-free models.

A model without retrieval is limited to its training data. RAG changes this by bringing in new information, which can make AI answers more accurate and users happier. Reported accuracy gains are typically up to 50%, and over 60% in areas that need specialized knowledge.
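To make the retrieve-then-generate idea concrete, here is a minimal Python sketch of the retrieval step. It is an illustration under simplifying assumptions, not how any particular RAG system works: documents are ranked by bag-of-words cosine similarity (real systems usually use embedding vectors and a vector database), and the helpers `retrieve` and `build_prompt` are hypothetical names. The resulting prompt would then be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Hypothetical helper: bag-of-words scoring stands in for embeddings
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Put the retrieved passages in front of the question so the LLM answers from them
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

For example, `build_prompt("How does RAG add context?", docs, k=1)` places only the single most similar document in the prompt, which keeps the context short and on topic.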

Key Benefits of RAG

- Improves accuracy of AI responses by up to 50%

- Enhances LLM performance by integrating external knowledge

- Decreases response generation errors by at least 30%

- Increases user trust by 40% when responses are sourced from credible external databases

Companies using RAG report higher user trust and fewer mistakes in AI answers, with reports of up to three times more precise answers to difficult questions. This makes RAG an essential building block for better AI.

Using RAG gets us past the old limits of LLMs, giving more precise and useful answers that improve both user experience and trust in AI.

| Traditional LLMs | RAG-Enhanced LLMs |
|---|---|
| Limited to training data | Integrates external knowledge |
| Lower accuracy | Improved accuracy by up to 50% |
| Higher response generation errors | Decreased response generation errors by at least 30% |

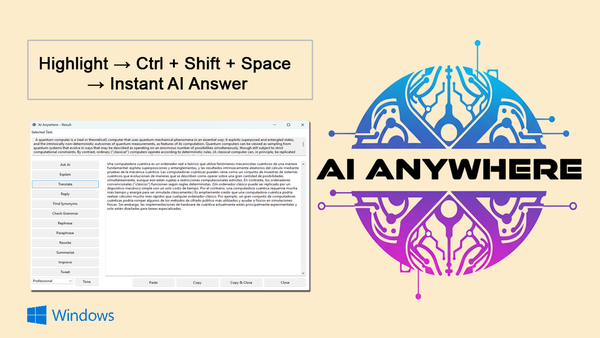

Transform any Website into LLM Knowledge with Crawl4AI

Crawl4AI is an open-source crawler built for turning websites into LLM knowledge. It processes website content quickly and efficiently, which makes it a strong choice for anyone who wants to bring web knowledge into their LLM workflows.

A key benefit is fast data retrieval: because it can crawl multiple URLs at once, it can turn a website into LLM knowledge in seconds.
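The speed gain from crawling several URLs at once comes from overlapping network waits. The sketch below shows that general pattern with Python's `asyncio`; it is not Crawl4AI's API. `fetch_page` is a hypothetical stub that sleeps instead of downloading, and `max_concurrency` is an assumed setting that caps how many requests run at the same time.

```python
import asyncio


async def fetch_page(url: str) -> str:
    # Hypothetical stand-in for a real HTTP or headless-browser fetch
    await asyncio.sleep(0.1)  # simulate network latency
    return f"<html><body><h1>{url}</h1></body></html>"


async def crawl_all(urls: list[str], max_concurrency: int = 5) -> dict[str, str]:
    # The semaphore limits how many fetches are in flight at once
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> tuple[str, str]:
        async with semaphore:
            return url, await fetch_page(url)

    # gather runs the bounded fetches concurrently and returns results in input order
    results = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return dict(results)
```

Calling `asyncio.run(crawl_all(urls, max_concurrency=10))` on ten URLs takes roughly one simulated latency period instead of ten, because the waits overlap. The semaphore keeps a large URL list from opening hundreds of connections at once.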

Some of the key features of Crawl4AI include:

- Fast and efficient website content processing

- Conversion of messy HTML content into clean markdown format

- Sequential and parallel crawling capabilities

- Ability to scrape multiple URLs with increased efficiency

- Integration with vector databases for storage and retrieval of scraped data
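Converting messy HTML into clean markdown is the heart of this feature list. As a rough, standard-library-only illustration (Crawl4AI's own converter is far more thorough), the sketch below walks the HTML with Python's `html.parser`, maps headings, paragraphs, links, and list items to markdown, and drops boilerplate tags. The class name `MarkdownConverter` and the set of skipped tags are assumptions made for this example.

```python
import re
from html.parser import HTMLParser
from typing import Optional


class MarkdownConverter(HTMLParser):
    """Minimal HTML-to-markdown sketch: headings, paragraphs, links, list items."""

    # Assumed boilerplate tags whose contents are dropped entirely
    SKIP = {"script", "style", "nav", "footer"}
    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.parts = []
        self.skip_depth = 0  # greater than 0 while inside a skipped tag
        self.href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif self.skip_depth:
            return
        elif tag in self.HEADINGS:
            self.parts.append("\n\n" + "#" * int(tag[1]) + " ")
        elif tag == "p":
            self.parts.append("\n\n")
        elif tag == "li":
            self.parts.append("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.parts.append("[")

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif not self.skip_depth and tag == "a":
            self.parts.append(f"]({self.href or ''})")
            self.href = None

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            # Collapse whitespace runs but keep single spaces between inline parts
            self.parts.append(re.sub(r"\s+", " ", data))


def html_to_markdown(html: str) -> str:
    converter = MarkdownConverter()
    converter.feed(html)
    converter.close()
    text = "".join(converter.parts)
    # Tidy each line: squeeze repeated spaces and trim the edges
    lines = [re.sub(r" {2,}", " ", line).strip() for line in text.splitlines()]
    return "\n".join(lines).strip()
```

The markdown keeps the page's structure (headings, links, lists) while the navigation, styles, and scripts that would waste an LLM's context are gone.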

Crawl4AI also manages proxies and sessions, which helps avoid being blocked while scraping. And because it is open source, users keep full ownership of their data and can deploy it with Docker.
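Proxy rotation is one common way scrapers avoid blocks. The sketch below is a hypothetical, minimal round-robin rotator and not Crawl4AI's proxy configuration: it hands out proxies in turn and skips any that have been marked as blocked. `ProxyRotator`, `ban`, and `next_proxy` are illustrative names, and the proxy URLs in the usage note are placeholders.

```python
from itertools import cycle


class ProxyRotator:
    """Round-robin proxy picker that skips proxies marked as blocked (hypothetical)."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)
        self._size = len(proxies)
        self._banned = set()

    def ban(self, proxy: str) -> None:
        # Mark a proxy as blocked so it is skipped from now on
        self._banned.add(proxy)

    def next_proxy(self) -> str:
        # Walk at most one full cycle looking for a proxy that is not banned
        for _ in range(self._size):
            proxy = next(self._pool)
            if proxy not in self._banned:
                return proxy
        raise RuntimeError("all proxies are banned")
```

With `ProxyRotator(["http://p1:8080", "http://p2:8080"])`, consecutive calls to `next_proxy()` alternate between the two; after `ban("http://p2:8080")`, only the first is returned. Spreading requests across exits lowers the chance that any single address trips a rate limit.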

For anyone who wants to bring LLM knowledge into their workflows quickly, Crawl4AI's ability to turn a website into usable knowledge in seconds makes it a popular choice among businesses and individuals.

| Feature | Description |
|---|---|
| Website Transformation | Transform any website into LLM knowledge in seconds |
| Content Processing | Fast and efficient website content processing |
| Markdown Conversion | Conversion of messy HTML content into clean markdown format |

The Architecture Behind Crawl4AI's Efficiency

Crawl4AI's efficiency comes from its design, which pulls data from websites quickly and precisely. Markdown conversion is central: clean markdown can go straight into an LLM prompt or an app without further cleanup.

The design is built for fast data collection. Crawl4AI crawls many URLs at once and applies content filters that discard irrelevant parts of a page, so users get large amounts of useful data quickly. That suits applications that need fast data handling.
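Content filters decide which parts of a page are worth keeping. The heuristic below is an assumption for illustration, not Crawl4AI's filtering logic: it drops text blocks shorter than a minimum word count (typical of menus and buttons) and, when keywords are supplied, drops blocks that mention none of them. `filter_blocks`, `min_words`, and `keywords` are hypothetical names.

```python
import re


def filter_blocks(blocks: list[str], min_words: int = 10, keywords: tuple[str, ...] = ()) -> list[str]:
    """Keep text blocks that are long enough and, if keywords are given, mention one."""
    wanted = {k.lower() for k in keywords}
    kept = []
    for block in blocks:
        words = re.findall(r"\w+", block.lower())
        if len(words) < min_words:
            continue  # short fragments are usually menus, buttons, or footers
        if wanted and not wanted.intersection(words):
            continue  # off-topic for this crawl
        kept.append(block)
    return kept
```

Filtering before anything reaches the LLM means fewer tokens to store, embed, and send, which is where much of the speed and cost benefit comes from.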

Crawl4AI can also extract structured data and output it as JSON as well as markdown. Because cleanup and extraction happen before any text reaches a model, less text has to be processed by the LLM, which makes the pipeline cheaper and faster.
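Producing both JSON and markdown from the same extracted records is straightforward. In this sketch, the record fields (`title`, `words`) and both helper functions are hypothetical; the point is that one extraction can feed a program (JSON) and an LLM prompt (a markdown table) at the same time.

```python
import json


def to_json(records: list[dict]) -> str:
    # Structured output for programs and APIs
    return json.dumps(records, indent=2, ensure_ascii=False)


def to_markdown_table(records: list[dict]) -> str:
    # LLM-friendly output; column order follows the first record's keys
    if not records:
        return ""
    headers = list(records[0].keys())
    lines = ["| " + " | ".join(headers) + " |", "|" + "---|" * len(headers)]
    for rec in records:
        # Escape pipes so cell values cannot break the table layout
        cells = (str(rec.get(h, "")).replace("|", "\\|") for h in headers)
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)
```

For example, `to_markdown_table([{"title": "Intro", "words": 120}])` yields a header row `| title | words |`, a separator row, and one data row.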

In summary, Crawl4AI's design is all about speed and cost savings. It's great for apps that need to process data fast and efficiently.

Setting Up Your First Crawl4AI Project

Getting started with Crawl4AI involves three steps: installation, configuration, and deployment. The library is designed to be easy to use, so you can set it up and start crawling websites quickly.

The first step is to install the required software. This includes Playwright for browser automation, which supports Chromium, Firefox, and WebKit (Safari’s engine). After installation, you can set up your project settings, including session management and handling dynamic content.

Some key features of the Crawl4AI project include:

- Asynchronous crawling for improved efficiency

- Automatic management of browser contexts and sessions

- Efficient processing of JavaScript content

- Simulation of human-like behavior to navigate complex user interactions
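One simple ingredient of human-like behavior is irregular pacing between requests. The sketch below is an assumption and not a description of how Crawl4AI simulates users: it fetches URLs one at a time and waits `base_delay` plus a random jitter between them. `pause_schedule` and `polite_crawl` are hypothetical helpers, `fetch` is any async function you supply, and the optional `seed` makes the schedule reproducible for testing.

```python
import asyncio
import random


def pause_schedule(n_requests, base_delay=1.0, jitter=0.5, seed=None):
    """Return the pauses (in seconds) to insert between n_requests sequential requests."""
    rng = random.Random(seed)
    return [base_delay + rng.uniform(0, jitter) for _ in range(max(0, n_requests - 1))]


async def polite_crawl(urls, fetch, base_delay=1.0, jitter=0.5, seed=None):
    """Fetch URLs one at a time, waiting a randomized interval between requests."""
    pauses = pause_schedule(len(urls), base_delay, jitter, seed)
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            # Irregular gaps avoid the perfectly even rhythm typical of bots
            await asyncio.sleep(pauses[i - 1])
        results[url] = await fetch(url)
    return results
```

Keeping the schedule in a separate pure function makes the randomness easy to inspect and test without waiting for real delays.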

With Crawl4AI, you can turn any website into LLM-ready markdown that is simple to pass to large language models, and you can see results from your first project quickly. It is a powerful tool for extracting data from websites and converting it into a usable format.
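Markdown still has to fit an LLM's context window. A common approach, sketched here under the assumption of a character budget (real pipelines often count tokens instead), is to split at headings and pack sections into chunks no longer than `max_chars`, hard-splitting any section that is too long on its own. `chunk_markdown` is a hypothetical helper, not part of Crawl4AI.

```python
def chunk_markdown(markdown: str, max_chars: int = 1000) -> list[str]:
    """Split markdown at headings, then pack sections into chunks of at most max_chars."""
    # Step 1: group lines into sections that each start at a heading
    sections, current = [], []
    for line in markdown.splitlines():
        if line.startswith("#") and current:
            sections.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        sections.append("\n".join(current).strip())

    # Step 2: greedily pack sections into chunks without exceeding the limit
    chunks, buffer = [], ""
    for section in sections:
        if not section:
            continue
        # Hard-split any single section that is longer than the limit
        pieces = [section[i:i + max_chars] for i in range(0, len(section), max_chars)]
        for piece in pieces:
            candidate = f"{buffer}\n\n{piece}" if buffer else piece
            if len(candidate) <= max_chars:
                buffer = candidate
            else:
                chunks.append(buffer)
                buffer = piece
    if buffer:
        chunks.append(buffer)
    return chunks
```

Splitting at headings keeps each chunk topically coherent, which tends to help retrieval. Note that lines inside fenced code blocks that begin with `#` would also be treated as headings here; a production version would need to track fences.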

By following these steps, you can set up your first project and start crawling websites quickly. Crawl4AI's straightforward setup and powerful features make it a good fit for anyone who wants to extract data from websites and convert it into a usable format.

Optimizing Your Web Crawling Strategy

To get the most out of your web crawling efforts, you need a solid web crawling strategy. This means selecting the right content and using optimization techniques to make the process smoother.

Choosing the right content is crucial. Look for high-quality, relevant content that matches your goals. Tools like Firecrawl can help by extracting content and making it easier to work with.

- Find the most relevant and high-quality content to crawl.

- Use the best tools and technologies to make crawling easier.

- Apply optimization techniques to speed up the process and improve performance.
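Two cheap optimizations are skipping duplicate URLs and staying on the target site. The sketch below, using only Python's `urllib.parse`, normalizes URLs (lowercase scheme and host, no fragment, no trailing slash) before deduplicating them and keeps only the target domain and its subdomains. `normalize_url` and `plan_crawl` are hypothetical helpers, and the normalization rules are assumptions: some sites treat `/docs` and `/docs/` as different pages.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url: str) -> str:
    # Lowercase scheme and host, drop the fragment, and trim trailing slashes
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def plan_crawl(urls: list[str], allowed_domain: str) -> list[str]:
    """Deduplicate URLs and keep only those on the target domain, preserving order."""
    seen, plan = set(), []
    for url in urls:
        norm = normalize_url(url)
        host = urlsplit(norm).hostname or ""
        if host != allowed_domain and not host.endswith("." + allowed_domain):
            continue  # off-site link
        if norm not in seen:
            seen.add(norm)
            plan.append(norm)
    return plan
```

Every duplicate removed here is one fewer page to fetch, render, and convert, so the savings grow with the size of the crawl.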

By following these tips and keeping your goals in mind, you can create a successful web crawling strategy. Whether you want to extract specific data or simply improve your content selection, the right approach makes a big difference.

Measuring Success: Key Performance Indicators

To see how well Crawl4AI works, we need clear goals, so we track key performance indicators (KPIs) that show whether it is doing its job.

Important KPIs include data quality, how fast it crawls, and if the content is relevant. By watching these, we can see what needs work. For example, if the data isn't good, we might need to tweak how we pick and store it.

Setting benchmarks and comparing actual results against them is key. Useful metrics include:

- Data coverage: How much good data Crawl4AI finds.

- Accuracy: How correct the extracted data is.

- Completion rate: How often Crawl4AI finishes its tasks.
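The three metrics above can be computed directly from per-page crawl results. In this sketch, `CrawlResult`, its fields, and `crawl_kpis` are hypothetical: `fields_correct` assumes you have hand-checked the extracted values, and coverage is measured against an assumed number of expected fields per page.

```python
from dataclasses import dataclass


@dataclass
class CrawlResult:
    url: str
    completed: bool      # did the crawl task finish without error?
    fields_found: int    # how many target fields were extracted
    fields_correct: int  # how many of those matched a hand-checked reference


def crawl_kpis(results: list[CrawlResult], fields_expected: int) -> dict[str, float]:
    """Compute completion rate, data coverage, and accuracy as fractions in [0, 1]."""
    if fields_expected <= 0:
        raise ValueError("fields_expected must be positive")
    if not results:
        return {"completion_rate": 0.0, "data_coverage": 0.0, "accuracy": 0.0}
    done = [r for r in results if r.completed]
    found = sum(r.fields_found for r in done)
    correct = sum(r.fields_correct for r in done)
    return {
        # Share of crawl tasks that finished
        "completion_rate": len(done) / len(results),
        # Share of all expected fields (across every page) that were extracted
        "data_coverage": found / (fields_expected * len(results)),
        # Share of extracted fields that were correct
        "accuracy": correct / found if found else 0.0,
    }
```

Tracking these as fractions makes it easy to compare runs against a benchmark, for example flagging any crawl whose completion rate drops below an agreed threshold.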

Monitoring these KPIs regularly and adjusting accordingly keeps Crawl4AI working at its best and helps us meet our goals.

| KPI | Description |
|---|---|
| Data quality | The accuracy and relevance of the collected data. |
| Crawling speed | The rate at which Crawl4AI collects data from websites. |
| Content relevance | The degree to which the collected data is relevant to your business needs. |

Conclusion: Revolutionizing LLM Knowledge Integration

Integrating Large Language Models (LLMs) with external knowledge is key to better AI responses. Retrieval-Augmented Generation (RAG) is a strong method for this: it combines the language abilities of an LLM with up-to-date information retrieved from external sources.

Crawl4AI makes it easy to turn any website into data for LLMs. Feeding that data into a RAG pipeline keeps information fresh and reliable, which cuts down on mistakes and improves response quality.

Crawl4AI helps businesses keep up with AI's fast pace. With easy setup and optimization, companies can make the most of generative AI, which is set to change how we work and make decisions.

FAQ

What is the concept of transforming any website into LLM insights instantly?

It means converting any website into data an LLM can use, quickly enough to keep the model's knowledge current. This addresses LLMs' knowledge gaps and relies on RAG to bring external information into responses. Crawl4AI makes the process easy and efficient.

It starts from where LLMs stand today: their limitations and the need for better integration of external knowledge.

What are the current limitations of large language models (LLMs)?

LLMs struggle with knowledge gaps and with keeping up with new information, and they can be slow and expensive to run.

For businesses, this can mean wrong answers in applications such as customer support, which is why better ways to add external knowledge are needed.

Why do traditional web scraping methods fall short?

Traditional web scraping is inefficient and often requires manual cleanup, which makes it hard to get the right data. It can also reduce click-through rates and ad revenue for website owners.

How does Retrieval-Augmented Generation (RAG) enhance LLM performance?

RAG retrieves relevant external information at query time and adds it to the model's prompt.

This makes LLM responses more accurate and helpful, with fewer errors, than answers drawn from training data alone.

How can Crawl4AI transform any website into LLM knowledge in seconds?

Crawl4AI crawls multiple URLs at once and converts messy HTML into clean markdown that is ready for LLMs.

Its speed and ease of use make it a good tool for many projects.

What is the architecture behind Crawl4AI's efficiency?

Crawl4AI converts HTML to markdown, crawls many URLs at once, and filters content so that mostly relevant data is kept.

It also integrates with vector databases for efficient storage and retrieval, which makes it a powerful tool for LLM applications.

How do I set up my first Crawl4AI project?

Setting up a Crawl4AI project is easy. Install it along with Playwright for browser automation, configure settings such as session management and dynamic-content handling, then deploy it and start crawling.

How can I optimize my web crawling strategy with Crawl4AI?

Start by selecting relevant, high-quality content to crawl.

Then use the best tools available to make crawling easier, and apply optimization techniques to speed up the process and improve performance.

How can I measure the success of Crawl4AI?

Track key performance indicators such as data quality, crawling speed, and content relevance, and compare results against benchmarks for data coverage, accuracy, and completion rate.